A Beginner's Guide to Differentiable Programming

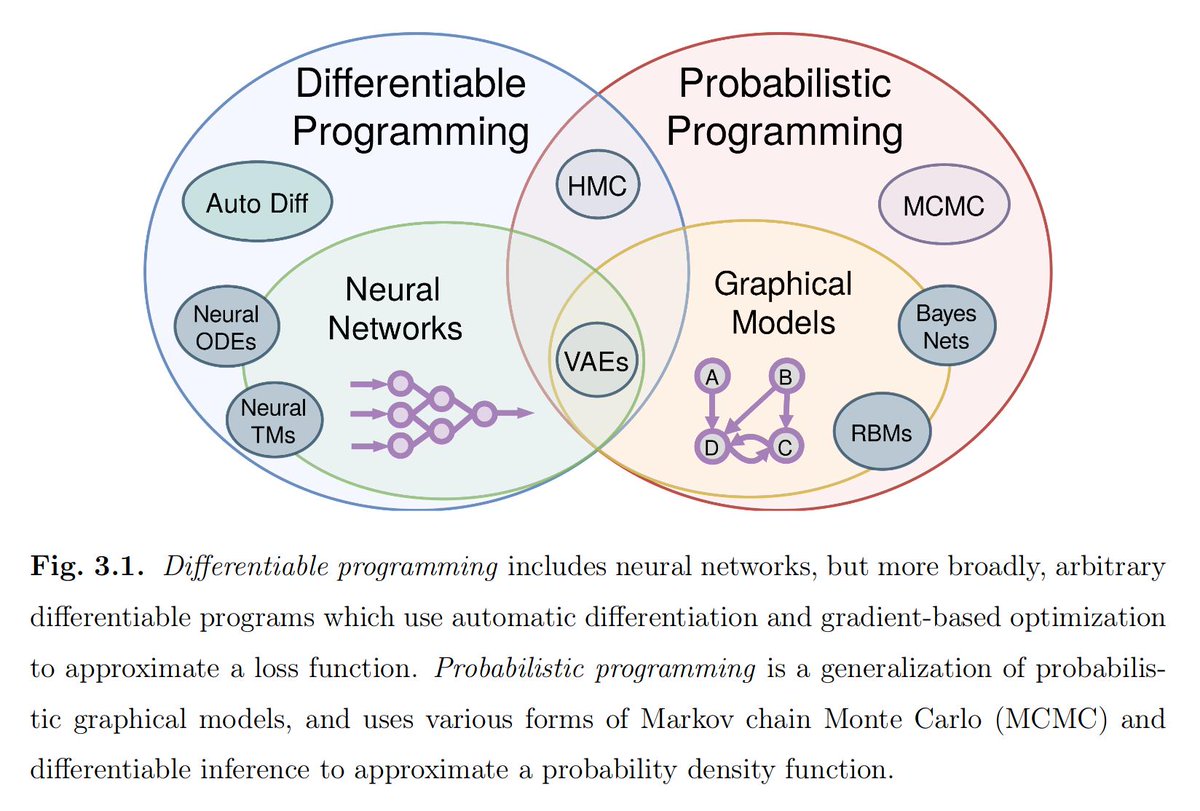

Differentiable programs are programs that rewrite at least one of their own components by optimizing along a gradient, as neural networks do with optimization algorithms such as gradient descent. Here’s a graphic illustrating the difference between differentiable and probabilistic programming approaches.
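To make “optimizing along a gradient” concrete, here is a minimal sketch in Python (the article itself contains no code, so the language and every name below are illustrative assumptions). It shows a one-parameter program, y = w·x, whose parameter is repeatedly nudged against the gradient of its mean squared error. The data, learning rate and step count are made up, and the gradient is derived by hand rather than computed automatically.

```python
# Minimal gradient-descent sketch: one trainable parameter w.
# The data, learning rate and step count are illustrative assumptions.

def model(w, x):
    """A tiny 'program' with one parameter w: predicts y = w * x."""
    return w * x

def loss_and_grad(w, xs, ys):
    """Mean squared error and its derivative with respect to w (derived by hand)."""
    n = len(xs)
    loss = sum((model(w, x) - y) ** 2 for x, y in zip(xs, ys)) / n
    grad = sum(2 * (model(w, x) - y) * x for x, y in zip(xs, ys)) / n
    return loss, grad

def train(xs, ys, w=0.0, lr=0.05, steps=200):
    """Repeatedly step w against the gradient of the loss."""
    for _ in range(steps):
        _, g = loss_and_grad(w, xs, ys)
        w -= lr * g
    return w

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated from y = 2x
w = train(xs, ys)
print(round(w, 4))  # prints 2.0
```

Here the “program” is trivial, but the loop is the same one a neural network runs: evaluate, measure the error, compute the gradient, and move the parameters a small step downhill.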

Credit: Breandon


Yann LeCun described differentiable programming like this:

“Yeah, Differentiable Programming is little more than a rebranding of the modern collection [of] Deep Learning techniques, the same way Deep Learning was a rebranding of the modern incarnations of neural nets with more than two layers. The important point is that people are now building a new kind of software by assembling networks of parameterized functional blocks and by training them from examples using some form of gradient-based optimization…. An increasingly large number of people are defining the networks procedurally in a data-dependent way (with loops and conditionals), allowing them to change dynamically as a function of the input data fed to them. It’s really very much like a regular program, except it’s parameterized, automatically differentiated, and trainable/optimizable. Dynamic networks have become increasingly popular (particularly for NLP), thanks to deep learning frameworks that can handle them such as PyTorch and Chainer (note: our old deep learning framework Lush could handle a particular kind of dynamic nets called Graph Transformer Networks, back in 1994. It was needed for text recognition). People are now actively working on compilers for imperative differentiable programming languages. This is a very exciting avenue for the development of learning-based AI. Important note: this won’t be sufficient to take us to “true” AI. Other concepts will be needed for that, such as what I used to call predictive learning and now decided to call Imputative Learning. More on this later….”
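LeCun’s description of “a regular program, except it’s parameterized, automatically differentiated, and trainable” can be illustrated with a small sketch. The Python below is a hypothetical example, not code from LeCun or from any framework. It uses forward-mode automatic differentiation with dual numbers (one of several ways to differentiate a program; PyTorch and Chainer use reverse mode) to differentiate an ordinary function whose loop count depends on its input, and then trains that function’s single parameter by gradient descent. The function `program`, its training data and the hyperparameters are all assumptions made for illustration.

```python
class Dual:
    """A number val + der*eps with eps**2 == 0; der carries the derivative."""
    def __init__(self, val, der=0.0):
        self.val = val
        self.der = der

    def _lift(self, other):
        # Treat plain numbers as constants (derivative 0).
        return other if isinstance(other, Dual) else Dual(other, 0.0)

    def __add__(self, other):
        o = self._lift(other)
        return Dual(self.val + o.val, self.der + o.der)
    __radd__ = __add__

    def __sub__(self, other):
        o = self._lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, other):
        return self._lift(other) - self

    def __mul__(self, other):
        o = self._lift(other)
        # Product rule: (a + a'eps)(b + b'eps) = ab + (a'b + ab')eps
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)
    __rmul__ = __mul__

def program(w, x):
    """An ordinary program with a loop and a data-dependent branch,
    parameterized by w (hypothetical example)."""
    h = x
    steps = 3 if x > 0 else 1  # the program's structure depends on its input
    for _ in range(steps):
        h = w * h + 1
    return h

def derivative(f, w, x):
    """d f(w, x) / d w via forward-mode AD: seed w with derivative 1."""
    return f(Dual(w, 1.0), x).der

def train(data, w=0.0, lr=0.01, steps=500):
    """Gradient descent on mean squared error, gradients from the Dual numbers."""
    for _ in range(steps):
        grad = 0.0
        for x, y in data:
            err = program(Dual(w, 1.0), x) - y
            grad += (err * err).der
        w -= lr * grad / len(data)
    return w

print(derivative(program, 0.5, 1.0))  # prints 2.75

# Training data generated from the same program with w = 0.5 (made-up values).
data = [(x, program(0.5, x)) for x in (1.0, 2.0, -1.0)]
w = train(data)
print(w)  # should end up very close to 0.5
```

No derivative is written by hand here: the same `program` runs on plain floats or on `Dual` values, and the derivative falls out of the arithmetic, loop and branch included.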

Differentiable programming will never be the buzzword that deep learning is. It’s too much of a mouthful. LeCun has proposed DP, DiffProg or dProg as less unwieldy substitutes.

In a more recent Reddit AMA, LeCun went on to say:

“With the ability to define dynamic deep architectures (i.e. computation graphs that are defined procedurally and whose structure changes for every new input) is a generalization of deep learning that some have called Differentiable Programming.”
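A dynamic computation graph can be sketched as a tape that is recorded while the program runs, in the “define-by-run” style popularized by Chainer and PyTorch. The minimal reverse-mode example below is an illustrative assumption, not how either framework is actually implemented; `Var` and `dynamic_program` are hypothetical names. Each call records a graph whose size depends on the input, and `backward` propagates gradients through whatever graph was recorded for that particular input.

```python
class Var:
    """A scalar node in a computation graph recorded as the program runs."""
    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # tuple of (parent Var, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))
    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))
    __rmul__ = __mul__

    def backward(self):
        """Reverse-mode sweep over the recorded graph.
        Returns the number of nodes in the graph."""
        order, seen = [], set()

        def visit(node):
            # Depth-first post-order gives a topological ordering.
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Visit each node only after every node that depends on it.
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += local * node.grad
        return len(order)

def dynamic_program(w, x):
    """The number of loop iterations depends on x, so each call records
    a graph with a different shape (hypothetical example)."""
    h = Var(x)
    for _ in range(int(x)):
        h = w * h + 1.0
    return h

for x in (1.0, 3.0):
    w = Var(0.5)  # fresh parameter node for each input
    out = dynamic_program(w, x)
    n_nodes = out.backward()
    print(x, n_nodes, w.grad)
# prints:
# 1.0 5 1.0
# 3.0 11 4.25
```

The graph for x = 3 has three times as many loop nodes as the one for x = 1, yet the same `backward` routine differentiates both, which is the property that makes data-dependent architectures trainable.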

Frankly, dynamic computation graphs for deep neural networks sound an awful lot like a kind of deep learning.